Open call by VRHAM!: INTERACTIVE ARTS

First REAL-IN Ambassador Winning project

DAZZLE is an outstanding project at the intersection of immersive media, performance, choreography and fashion. This makes it the perfect fit for our first REAL-IN residency, which aims to explore the possibilities of the new interactive technology provided by Dark Euphoria/Inlum.in. We believe that DAZZLE’s extraordinary creative team will challenge, explore and further develop the deployment of this technology and will find innovative ways in which participative and interactive real-time tracking techniques can add to the overall artistic experience of the project.

Ulrich Schrauth

Founder & Creative Director, VRHAM!

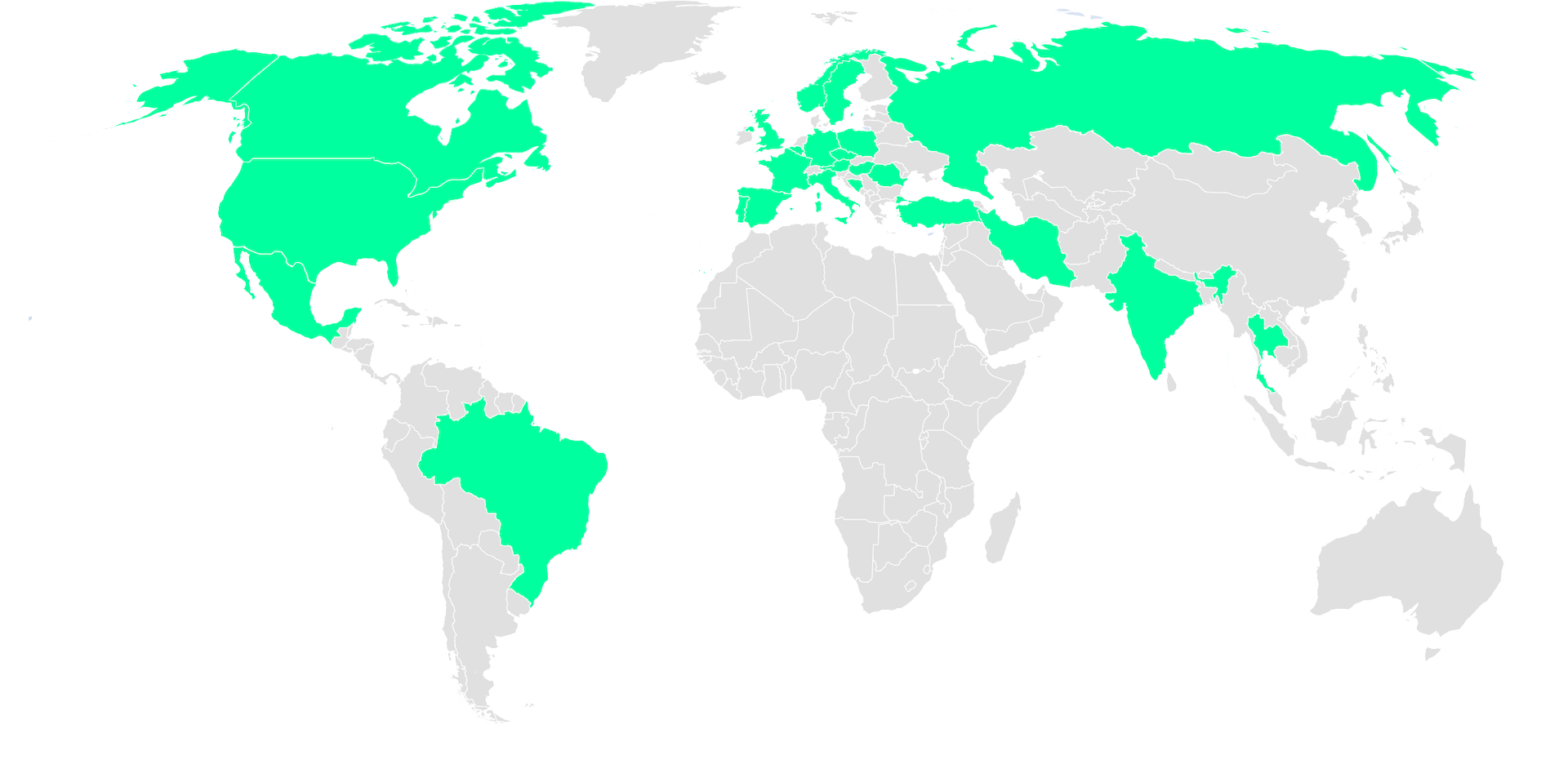

88 submitted proposals from 28 different countries

The Jury

Judith Guez

Artist-researcher, curator and developer in VR/MR

Her research focuses on understanding and creating illusions between the real and the virtual to explore new artistic forms, mobilizing the concepts of presence and wonder. She has exhibited artworks at several international venues (Ars Electronica in Austria, Gaîté Lyrique, GoogleLab, BPI Centre Pompidou, Centre des arts Enghien, MOCA Taipei in Taiwan, VR World Forum in Switzerland). She is one of the co-founders of the VRAC (VR Art Collective) and is the founder and director of the artistic department at Laval Virtual. In this context, she created the international Art&VR festival Recto VRso at Laval Virtual in 2018.

Tomás Saraceno

Contemporary Artist

Tomás Saraceno’s floating sculptures and interactive art installations explore sustainable ways of inhabiting and sensing the environment. From collaborations with the air to spider/webs, he envisions ethical relationships with the terrestrial, atmospheric, and cosmic realms. Saraceno’s community projects Aerocene and Arachnophilia furthermore invite all to deepen their understanding of environmental justice and interspecies cohabitation. In the past two decades Saraceno has collaborated with the Massachusetts Institute of Technology, the Max Planck Institute, Nanyang Technological University, Imperial College London and the Natural History Museum London.

Lilli Paasikivi

Artistic director of the Finnish National Opera

Lilli Paasikivi has worked as Artistic Director of the Finnish National Opera since 2013.

In her work, Paasikivi has set out to reshape the structures of opera and to find ways to combine technology with opera. In 2019, Paasikivi launched Opera Beyond, a project which aims to apply new technological possibilities and tools in opera and ballet. Alongside this, she has built a significant international career, performing on the leading opera and concert platforms of the world. Paasikivi was awarded the Pro Finlandia Medal in recognition of her artistic merits in 2008 and the Commander’s Badge by the Order.

Ulrich Schrauth

Artistic Director VRHAM! Festival

Ulrich Schrauth is an international curator, creative director and artist working in the field of immersive media. His posts include artistic director of VRHAM! Festival Virtual Reality & Arts Hamburg as well as XR and immersive programmer for the BFI London Film Festival. In addition, he oversees many international projects involving virtual, augmented and mixed reality, and acts as a speaker, moderator and jury member in this field.

The winning project

DAZZLE

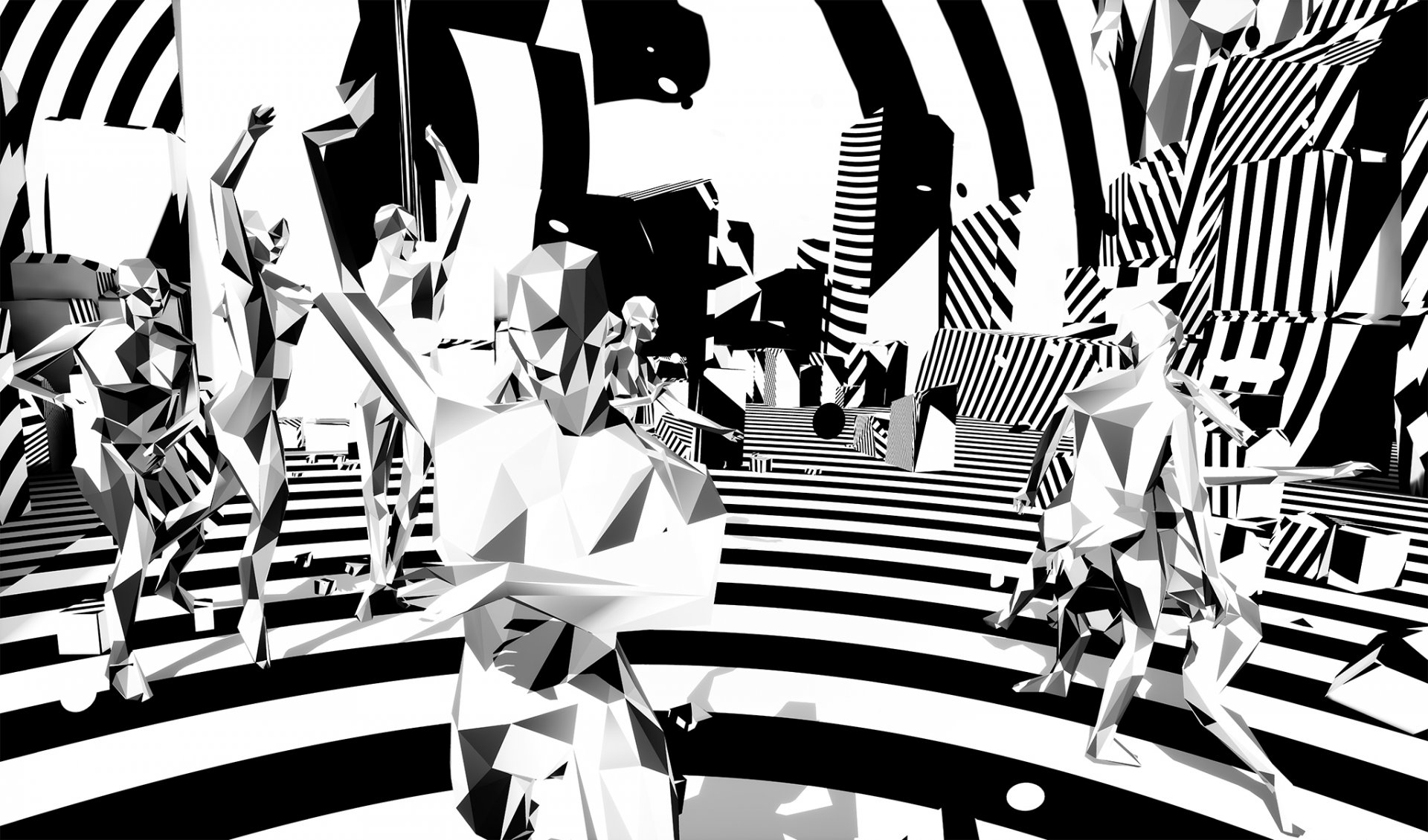

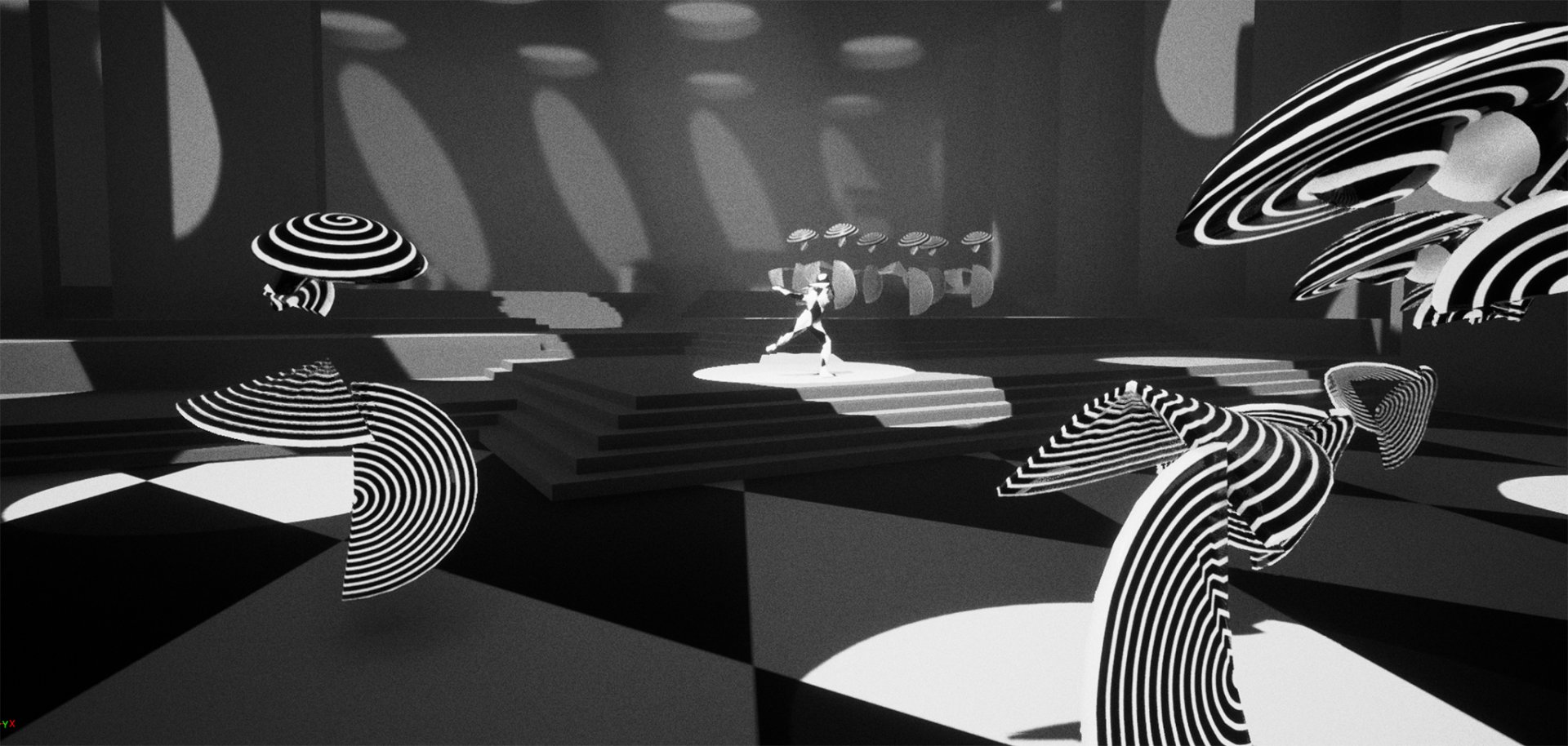

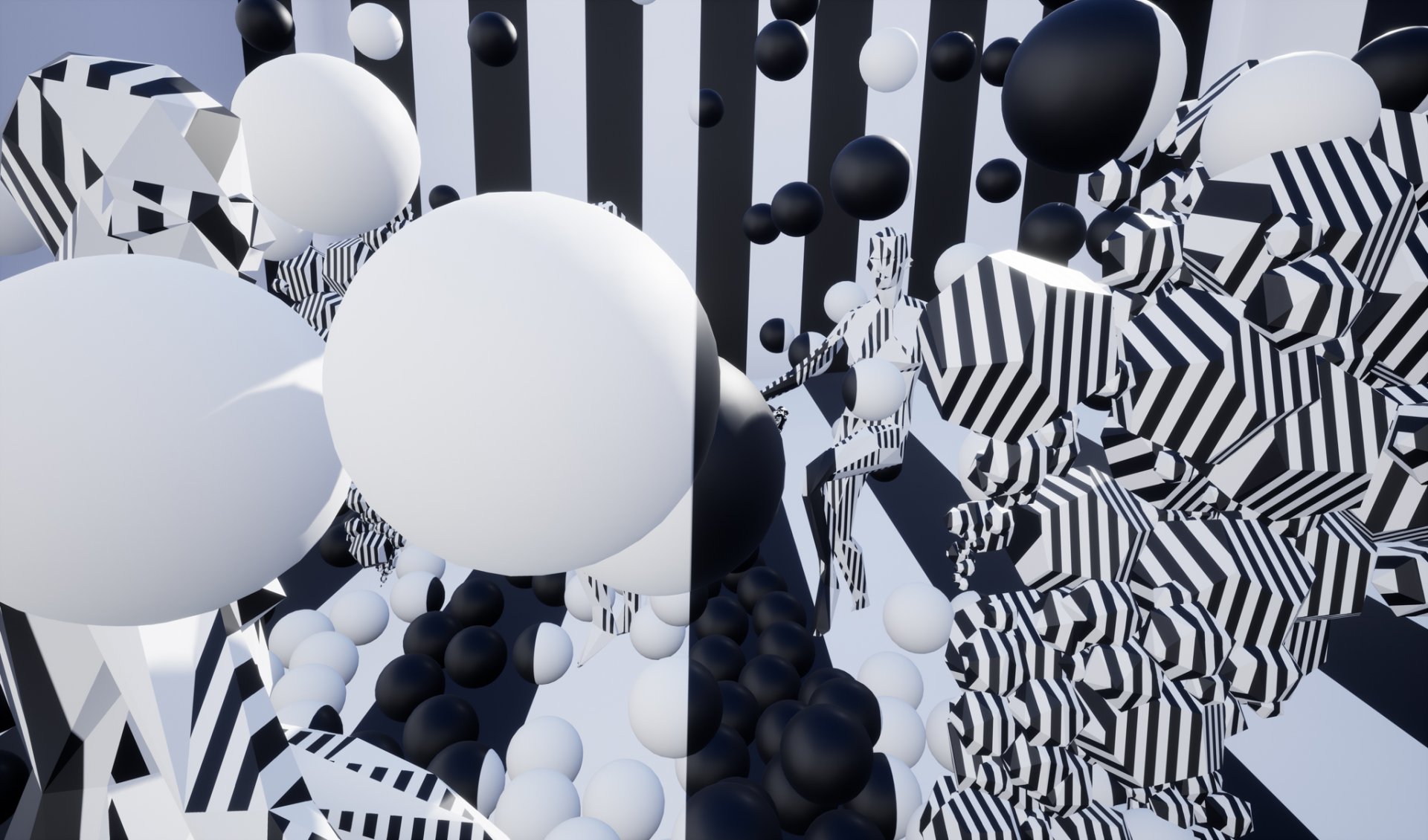

DAZZLE is an artistic project created by Gibson/Martelli Studio in collaboration with design collective Peut-Porter. Dazzle Camouflage is the main inspiration for the project, and its visual patterning, at once visceral and unsettling, provides a joyous and striking visual motif. Hand in hand with our investigations into performance technologies, DAZZLE takes us on a playful journey, both physical and virtual. Our engagement with digital technologies (disrupting, augmenting and interfering uncannily with co-presence in virtual and real spaces) is causing us to reassess our perception of space, time and place.

The project takes a narrative of creativity emerging from catastrophe to celebrate the power of art to transform, exploring ideas that have particular resonance today: the uneasy relationships and blurred boundaries between real and virtual worlds; identity and ‘camouflage’ in online and other social interactions; post-truth in the digital age; and artistic co-creation and maker culture, online and offline.

DAZZLE crosses borders and territories by digital means. We see new art forms, styles, musical forms, political movements, and financial systems ousting what has gone before. DAZZLE reflects this wave: the future of live performance enabled by new gaming technology, looking forward with an eye on the past.

In a week-long residency in Marseille in July, the British team continued their work under the guidance of award-winning production company Dark Euphoria.